Face ID debuted on the iPhone X in 2017. It uses 3D facial recognition to authenticate you. You can use it to unlock your iPhone and approve transactions, and it's well integrated throughout the UI. Third-party apps can take advantage of Face ID, too.

Moving Face ID under the display is taking Apple a long time. In this article, we'll look at when it could arrive on the iPhone and how Apple might achieve under-display Face ID.

How does Face ID work?

Face ID uses what Apple calls the TrueDepth camera. This system, which combines the front camera with the Face ID module, has three key components.

The first is the flood illuminator, which lights your face with infrared light so Face ID works even in pitch darkness. These sensors also provide depth sensing for the front camera.

The second is the dot projector, which projects more than 30,000 precise dots of infrared light onto your face. Finally, an infrared camera reads this dot pattern.

Apple's Neural Engine then uses machine learning to match this data against your enrolled face. Face ID improves over time because the model adapts to gradual changes in your appearance.

Over time, your phone will unlock even when you wear sunglasses or a hat. It'll still unlock if you grow facial hair, apply makeup, or change your hairstyle. You can also manually set up Face ID to recognize you while you wear glasses.

More recently, Apple added Face ID with a Mask: if you enable it in the Face ID settings, your phone will unlock even while you wear a face mask.

According to Apple, your facial data, including everything the infrared camera reads, is protected by the Secure Enclave. Face ID is also attention-aware, so it can tell whether or not you're looking at the phone.

If you turn off the attention-aware features, you give up much of the security Face ID offers. Apple puts the chance that a random person could unlock your iPhone with Face ID at about 1 in 1,000,000. That's better than the 1 in 50,000 Apple quotes for Touch ID.
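To put Apple's figures of 1 in 1,000,000 for Face ID and 1 in 50,000 for Touch ID in perspective, here's a quick back-of-the-envelope sketch in Python. It assumes every stranger's attempt is an independent random trial, which is our simplification. Apple's rates describe random people, not twins or lookalikes. The helper name `chance_of_any_false_match` is our own, purely for illustration.

```python
# Apple's published odds that a random stranger could unlock your phone
FACE_ID_ONE_IN = 1_000_000
TOUCH_ID_ONE_IN = 50_000


def chance_of_any_false_match(one_in: int, strangers: int) -> float:
    """Probability that at least one of `strangers` random people is accepted.

    Simplifying assumption: every attempt is independent and has a
    false-match rate of exactly 1/one_in.
    """
    rate = 1 / one_in
    return 1 - (1 - rate) ** strangers


# How many times less likely a random false match is with Face ID
ratio = FACE_ID_ONE_IN / TOUCH_ID_ONE_IN

if __name__ == "__main__":
    print(f"Face ID is {ratio:.0f}x less likely to accept a random stranger")
    for name, one_in in (("Face ID", FACE_ID_ONE_IN), ("Touch ID", TOUCH_ID_ONE_IN)):
        p = chance_of_any_false_match(one_in, 100)
        print(f"{name}: {p:.4%} chance that any of 100 strangers gets in")
```

With 100 random strangers trying, that works out to roughly 0.01% for Face ID versus about 0.2% for Touch ID. The model says nothing about twins, since their faces aren't random.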

However, Face ID is less secure with identical twins, who share very similar facial features and structure. The Neural Engine could overlook the tiny differences between them.

Under-Display Face ID technology has been delayed

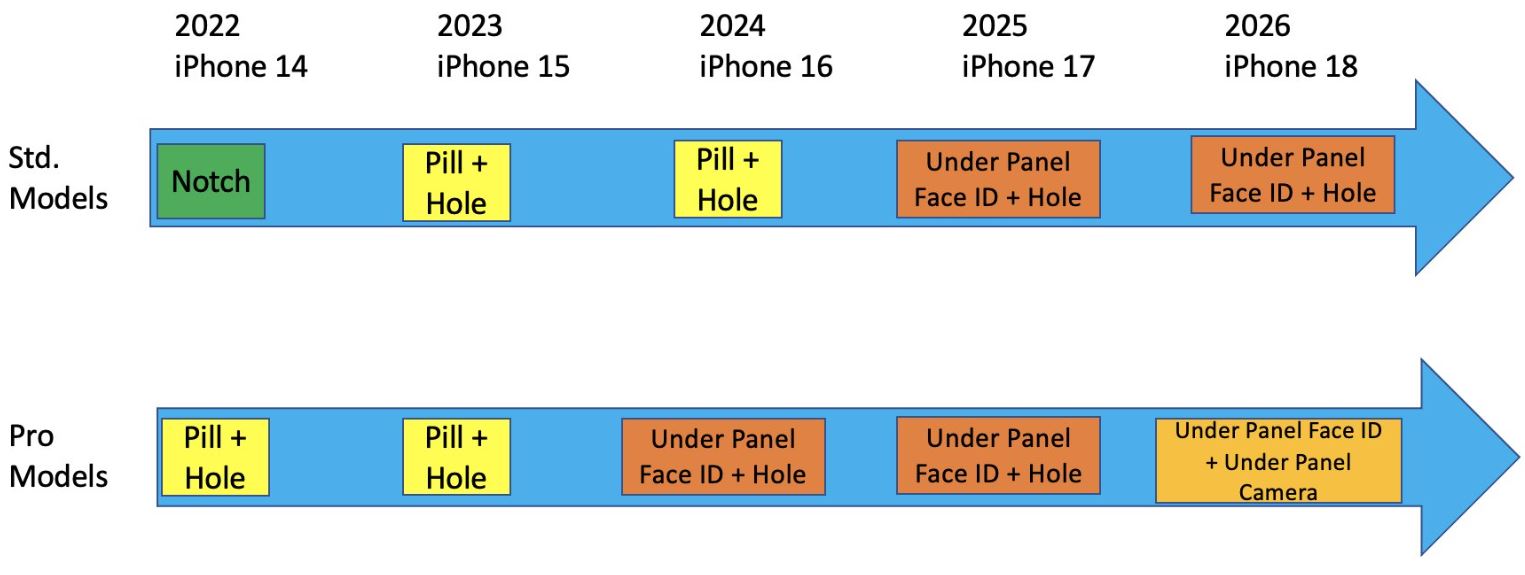

- We might have to wait until 2025 to see an iPhone with under-display Face ID.

Display analyst Ross Young reports that Apple won't use under-display Face ID in its 2024 iPhones.

Under the original roadmap, Apple was expected to move to a punch-hole design in 2024, with a couple of components placed under the display. The revised roadmap pushes this back by a year, reportedly because of sensor problems.

With the plans delayed by at least a year, the iPhones released in 2024 won't have Face ID components under the display.

One major downside of Face ID is the display cutout it requires, otherwise known as the notch. The notch houses the Face ID module, with all the essential Face ID sensors, along with the front camera.

The notch on the iPhone X was rather large and took up a lot of screen real estate. With the iPhone 13 series, the notch shrank. However, making it smaller created other problems.

Although it's narrower, the new notch is taller. This means it cuts into more YouTube videos and other full-screen content, spoiling the multimedia experience. It isn't great for gamers, either.

If a game isn't optimized for the notch, the cutout will cover part of the content. The Dynamic Island, introduced with the iPhone 14 Pro, makes this even worse.

What’s causing the delays in Under-Display Face ID?

Ross Young didn't go into detail about the reasons for these delays, so we can only speculate.

The sensor issues could mean that the depth sensor and dot projector didn't work well under the display during testing. Apple doesn't usually release half-baked products, so the results were probably not good enough for the company.

It's worth noting, however, that Samsung tried something similar with the Galaxy S10 5G, which paired its punch-hole front camera with a time-of-flight (ToF) depth sensor. In principle, a ToF sensor can unlock your phone more securely than a typical 2D face-unlock system, but Samsung's approach still wasn't as fast, accurate, or secure as Apple's Face ID.

The S10 5G still lacked a dot projector and a flood illuminator for precise, accurate scanning. Even so, it was a good demonstration of face unlock without a notch.

Face ID also isn't as secure as Apple makes it out to be. Setting aside the problems with twins, it can still unlock for a person who only slightly resembles the owner.

This stems from how Face ID fundamentally works. Its machine learning model automatically adapts to changes in your face, so it may accept a similar-looking face, especially in low light.

Security concerns aside, Face ID has other inherent drawbacks, too. It isn't as convenient as a fingerprint scanner, because you have to look at the top of the phone to unlock it.

You still can't easily skip the swipe-up gesture after Face ID recognizes you, and it doesn't work at many angles.

If your phone is lying on a table next to you and you want to unlock it without picking it up, you have to move your face into position so the sensors can scan it.

Face ID improvements

The iPhone's Face ID hardware has stayed largely the same since 2017, although every year brings new chips with faster Neural Engines.

As the Neural Engines get better at face recognition and depth mapping, Face ID gets slightly faster each year. On newer iPhone models, it also works at more angles and adapts better to changes in your face.

iPhones with the A15 and A16 Bionic chips can also use Face ID in landscape orientation. Models with the A14 and earlier can't, probably because the feature needs a more capable Neural Engine.

Alternatively, Apple may simply have chosen not to enable the feature on older iPhones. The A14's Neural Engine is still very capable, so the reasons could have been security-related.

If we see under-display Face ID in the future, it will most likely use better sensors than the current ones, allowing unlocking at more angles.

What about Touch ID?

Apple is still researching under-display fingerprint technology, which Android phones have had for years. It started with simple optical scanners, but many phones now have ultrasonic sensors under the display.

When Apple finally adopts an under-display fingerprint scanner, it will probably have other functions, too. Many analysts assume Face ID will remain the norm, but we're not so sure.

Apple holds many patents that make Face ID hard for other companies to copy. If Apple reintroduces Touch ID, a new generation of the sensor might be faster.

We'd also expect vein detection and a heart rate sensor. Samsung offered similar technology in the past, although its latest flagship phones, unfortunately, no longer include heart rate detection.

It might also support blood oxygen tracking. An optical scanner wouldn't impress anyone, but a good ultrasonic scanner is more secure and can cover a larger area of the screen, which makes unlocking easier.

An ultrasonic scanner is also less likely to fail with wet fingers. Apple reportedly tested under-display Touch ID for some iPhone 13 models but didn't move forward, probably because it couldn't meet its consistency standards.

Conclusion

Both the notch and the Dynamic Island have many functional problems, and many people don't find the design appealing. The Dynamic Island interferes significantly with multimedia, and interacting with it isn't user-friendly.

To use the Dynamic Island for multitasking, you have to keep reaching for the top of the phone. That runs counter to better one-handed use, which was a theme of iOS 16.

Constantly reaching for the top of the phone also smudges the front camera. Because the front camera sits inside the Dynamic Island pill, your photos will suffer if your fingers keep smudging the lens.

Since the Dynamic Island isn't user-friendly and also interferes with content, it's no surprise that we're all waiting for Apple to move to either a punch-hole or an under-display camera.

Unfortunately, we might have to wait until 2025 for a punch-hole design with many components under the screen. If Apple doesn't sort out the issues, that could slip to 2026.

If there are further delays, we may not see an iPhone with no display cutouts at all until 2027 or, in a worst-case scenario, even later.

Note: We’ll update you if there’s further information about Face ID under the iPhone display.